All of the ways to Deploy and Use VPCs in VCF 9 - Part 1

Virtual Private Clouds (VPCs) entered the scene in NSX 4.1 with little fanfare a bit under 2 years ago. At the time, the focus was centered on creating a solid foundation of multi-tenant building block components and introducing the concept to VMware customers.

In VCF 9, VPCs have been enhanced and have become a “First Class Citizen” in the VCF Private Cloud. VPCs are integrated across the platform, and there are many options available for configuration and consumption.

In this series I will discuss all of the new ways you can utilize VPCs within VCF 9. In Part 1, I will start with an overview of the Building Blocks that enable the VPC functionality.

Building Blocks

There are multiple levels of tenancy built into VPCs - essentially different roles that have different responsibilities:

Components

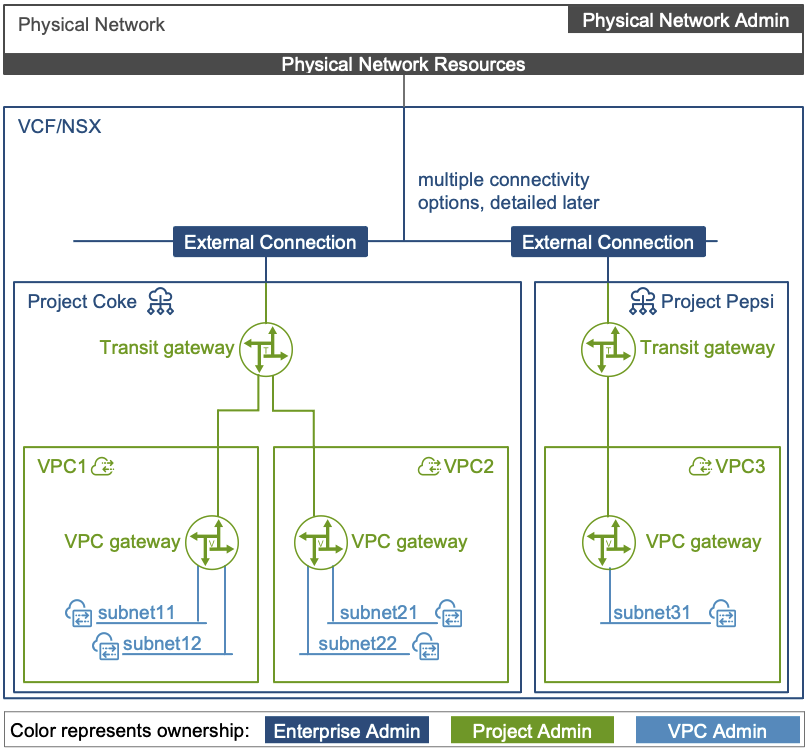

Project

Projects are like network tenants. They provide an isolated space where you can configure networking and security services and provide Quotas for objects within the Project. IP reuse is possible across Projects. Most importantly, you provision VPCs inside of a Project.
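
To make the Project idea concrete, here is a minimal sketch in plain Python. It is not the NSX API: the `Project` class, the `max_vpcs` quota field, and the project names are all hypothetical, and it only models two points from above, that a Project can cap what is created inside it and that separate Projects can reuse the same IP space.

```python
from dataclasses import dataclass, field


class QuotaExceeded(Exception):
    """Raised when a Project has no room left for another VPC."""


@dataclass
class Project:
    # Hypothetical model: only the number of VPCs is quota-limited here.
    name: str
    max_vpcs: int
    vpcs: dict[str, list[str]] = field(default_factory=dict)

    def create_vpc(self, vpc_name: str, cidrs: list[str]) -> None:
        """Provision a VPC inside this Project, enforcing the quota."""
        if len(self.vpcs) >= self.max_vpcs:
            raise QuotaExceeded(
                f"{self.name}: quota of {self.max_vpcs} VPCs reached"
            )
        self.vpcs[vpc_name] = list(cidrs)


# Each Project is an isolated space, so both can use 10.0.0.0/24.
finance = Project("finance", max_vpcs=1)
engineering = Project("engineering", max_vpcs=2)
finance.create_vpc("payroll", ["10.0.0.0/24"])
engineering.create_vpc("ci", ["10.0.0.0/24"])
```

Trying to create a second VPC in `finance` would raise `QuotaExceeded`, which is the kind of guardrail a quota is meant to provide.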

In VCF 9, there is a “Default” Project that is created so you can rapidly provision VPCs in many areas of VCF without going through extra steps to create a Project first.

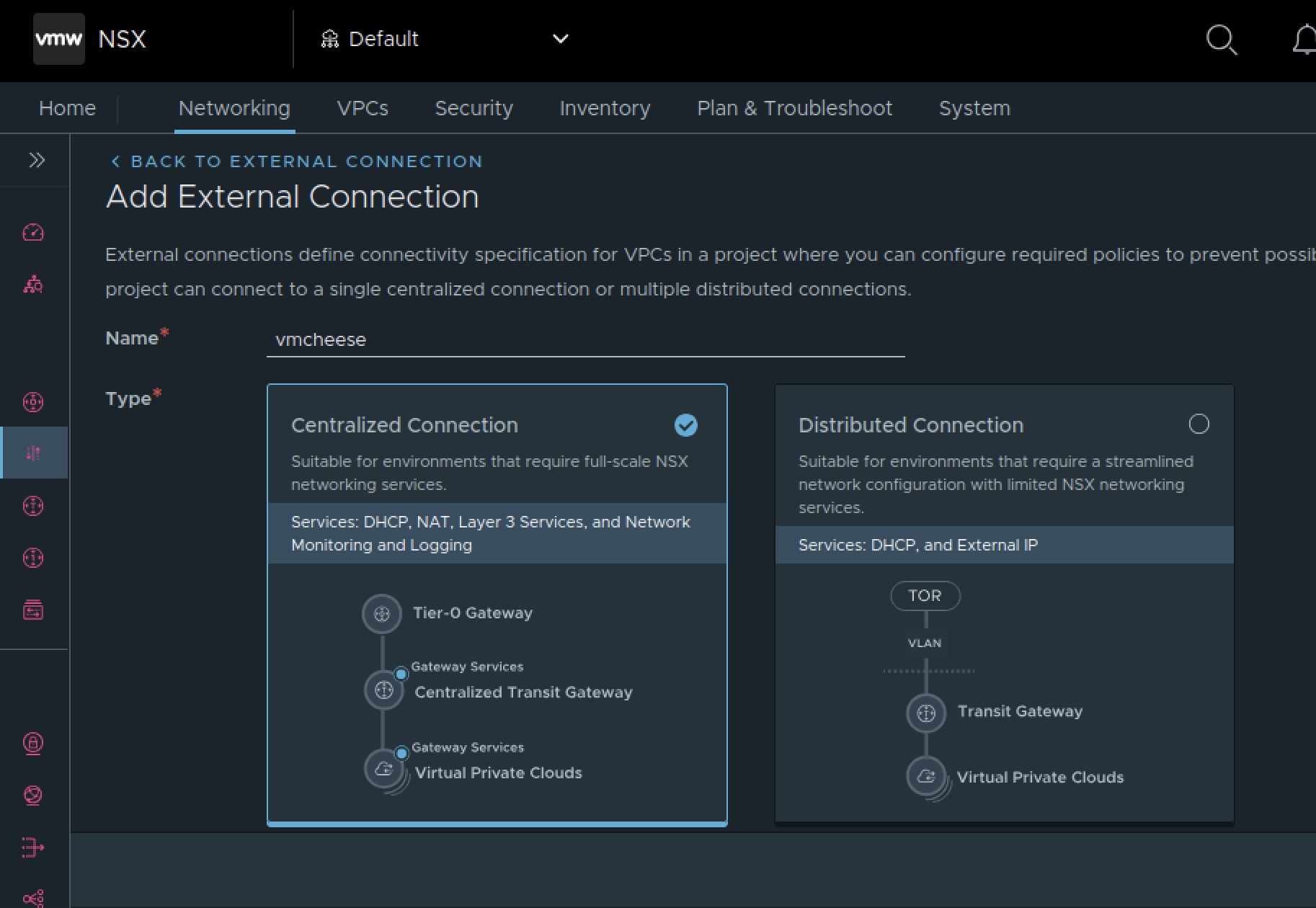

External Connectivity and Transit Gateways

In VCF 9, there’s an exciting new way to connect VMs to the physical network. Traditionally, you had to deploy NSX Edges and peer T0 Gateways with the underlay network using eBGP.

Although that option is still available and still has use cases that require it, there is a new distributed (read - “no edges required”) option!

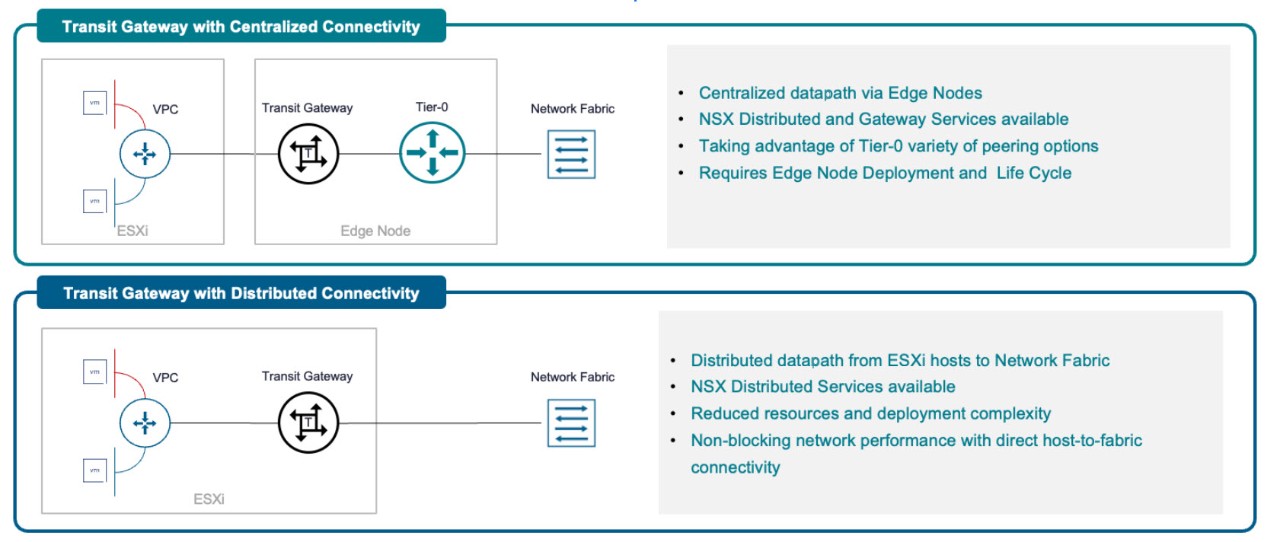

The two options that now exist are:

- Transit Gateway with Centralized Connectivity, which we call the Centralized Transit Gateway (CTGW)

- Transit Gateway with Distributed Connectivity, which we call the Distributed Transit Gateway (DTGW)

The Transit Gateway (TGW) is a new network object that is maintained in NSX in VCF 9. Although it is a native object, you can think of it as an object similar to a T0 VRF. The TGW can have a distributed (Distributed Router) component and it may, if using the Centralized Connectivity option, have a centralized (Service Router) component.
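
As a rough mental model of that last sentence, the sketch below maps each connectivity option to the router components described above. The enum and function names are my own for illustration; this is not an NSX data structure.

```python
from enum import Enum


class TgwConnectivity(Enum):
    CENTRALIZED = "CTGW"
    DISTRIBUTED = "DTGW"


def tgw_components(mode: TgwConnectivity) -> list[str]:
    """Return the router components a Transit Gateway can have in this mode."""
    # The distributed component is available with either option.
    components = ["Distributed Router"]
    # Only the Centralized Connectivity option adds a centralized component.
    if mode is TgwConnectivity.CENTRALIZED:
        components.append("Service Router")
    return components
```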

VPC

Virtual Private Clouds, or VPCs, are what I call a “Cloud Consumption Unit”, since a VPC creates an atomic unit of VCF Networking that can be divvied up to a specific group (e.g. Platform Engineering or Lines of Business) through Role-based Access Control (RBAC).

Anything created in a VPC is self-contained, meaning that if a VPC Admin mucks up their config, it won’t affect any other VPCs.

VPCs allow for network and security policy isolation, adding another layer to VCF’s multi-tenancy model.

VPCs can provide for rapid segmentation of applications without the need for high-effort application profiling (with tools such as VCF Operations for Networks or vDefend Security Intelligence).

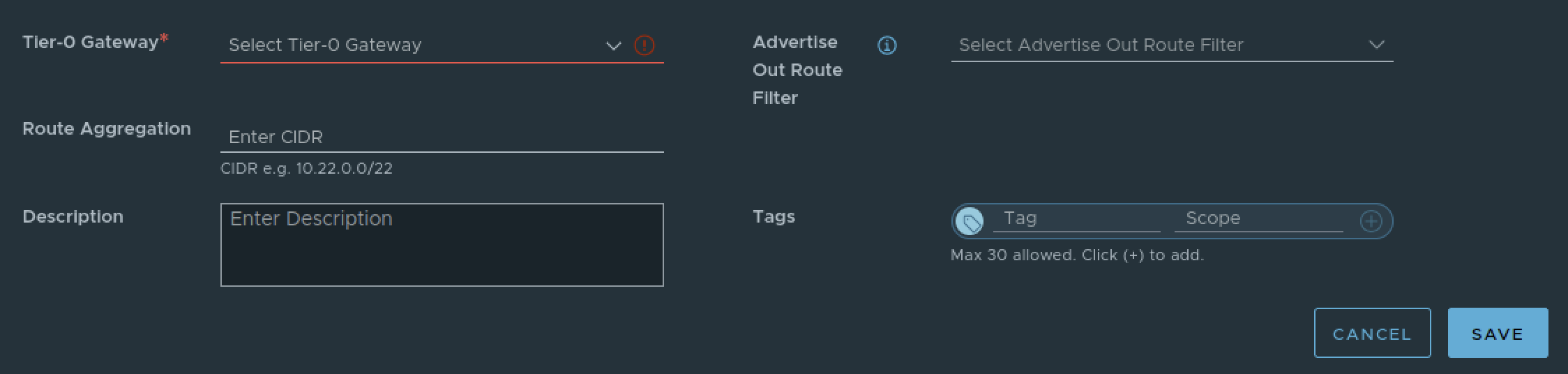

VPC Connectivity Profile

A Connectivity Profile (CP) is a collection of objects that define how VPCs within a Project communicate with each other and northbound with the outside world.

When a Project is created, a default Connectivity Profile is created with common settings. These settings can be modified and new Profiles can be created to further customize the configuration.

This abstraction means Project and VPC admins don’t have to concern themselves with how their Services connect to the outside, while Enterprise Admins can place guardrails around what services Tenants can connect to and how they are connected (things like BGP Peers, Routing Protocols, BFD configuration, EVPN config, etc.).

The objects include a Transit Gateway (TGW) (Centralized or Distributed), a Tier-0 Gateway, External and/or Private - Transit Gateway IP Blocks, and, depending on the Transit Gateway type, may also include Route Aggregation, Route Filtering, VLANs and other details.

VPC Service Profile

The Service Profile defines things like DHCP Server / Relay configuration, as well as Subnet Profiles for things like QoS, Spoofguard, etc.

Subnet

Subnets are networks within a VPC. They are essentially NSX Overlay Segments, but scoped to a VPC. They can either be Public (routable), Private with VPC scope (the NAT boundary is the VPC), or Private with Transit Gateway scope (the NAT boundary is the Transit Gateway, so VPCs within the Transit Gateway use Private IP addresses to communicate with each other).
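
One way to keep the three Subnet types straight is to ask where the NAT boundary sits for a given destination. The sketch below encodes just the rules in the paragraph above; the enum names and the `crosses_nat_boundary` helper are hypothetical and are not NSX API values.

```python
from enum import Enum


class SubnetType(Enum):
    PUBLIC = "public"
    PRIVATE_VPC = "private, VPC scope"
    PRIVATE_TGW = "private, Transit Gateway scope"


class Destination(Enum):
    SAME_VPC = "same VPC"
    OTHER_VPC_SAME_TGW = "another VPC on the same Transit Gateway"
    EXTERNAL = "beyond the Transit Gateway"


def crosses_nat_boundary(subnet: SubnetType, destination: Destination) -> bool:
    """Return True if traffic from this Subnet type crosses its NAT boundary."""
    if subnet is SubnetType.PUBLIC:
        # Public Subnets are routable, so there is no NAT boundary to cross.
        return False
    if subnet is SubnetType.PRIVATE_VPC:
        # The NAT boundary is the VPC itself.
        return destination is not Destination.SAME_VPC
    # PRIVATE_TGW: the NAT boundary is the Transit Gateway.
    return destination is Destination.EXTERNAL
```

For example, a Private (Transit Gateway scope) Subnet reaches another VPC on the same Transit Gateway with its private addresses, while a Private (VPC scope) Subnet crosses its NAT boundary on that same path.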

Roles

To put guardrails around network and security resources, NSX provides Role-based Access Control (RBAC) for Projects and VPCs. Different functions can be delegated to different roles, and roles can be assigned to individual resources.

The three roles are:

- Enterprise Admin

  - The traditional “admin” account in NSX. When it comes to VPCs, their role entails:

    - Importing/Creating SSO so different roles can be defined for RBAC

    - Creating external IP blocks for consumption by VPCs

    - Creating the Edges, T0s, and/or TGWs to support VPCs

    - Creating Projects and assigning groups to the Project Admin role

    - Defining quotas for Projects

- Project Admin

  - A role that is assigned to Tenant admins that oversee multiple VPCs, e.g. multiple projects or groups within a Line-of-Business:

    - Creating VPCs

    - Assigning resources and RBAC to VPCs

    - Assigning quotas to VPCs

- VPC Admin

  - A role that is assigned to a team overseeing an individual project or application:

    - Creating networks and other VPC resources
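
The split of duties above can be written down as a simple lookup. This is only a sketch of the responsibilities as I listed them, not the NSX permission matrix; the duty strings and the `role_for` helper are made up for illustration.

```python
# Duties paraphrased from the role list above; not an NSX permission matrix.
RESPONSIBILITIES: dict[str, set[str]] = {
    "Enterprise Admin": {
        "import or create SSO",
        "create external IP blocks",
        "create Edges, T0s, and TGWs",
        "create Projects",
        "assign Project Admins",
        "define Project quotas",
    },
    "Project Admin": {
        "create VPCs",
        "assign resources and RBAC to VPCs",
        "assign VPC quotas",
    },
    "VPC Admin": {
        "create networks and other VPC resources",
    },
}


def role_for(duty: str) -> str:
    """Return the role that the list above makes responsible for a duty."""
    for role, duties in RESPONSIBILITIES.items():
        if duty in duties:
            return role
    raise KeyError(f"no role in this sketch owns: {duty}")
```

Calling `role_for("create VPCs")` returns `"Project Admin"`, which matches the delegation chain: Enterprise Admins build the foundation, Project Admins carve out VPCs, and VPC Admins build inside them.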

VPC Ready

VPCs have received a major upgrade in VCF 9. Not only are there new VPC features, but VPCs have also expanded from an NSX-specific capability to a platform-wide primitive.

New Features

We call VCF 9 “VPC Ready” because of a number of things:

- NSX Manager is installed as part of the VCF Installer process for the Management Domain as well as any Workload Domains

- The ESX 9 image includes NSX VIBs out of the gate, so there is no need to push VIBs to install NSX anymore! It also means ESX and NSX VIBs are upgraded at the same time.

- A default Project is created and default Connectivity and Service Profiles can be used to get started.

In order to create your first VPC, the major prerequisite is to define your External Connectivity. Essentially, you need to choose between a CTGW and a DTGW.

Platform Capabilities

When VPCs were released in NSX 4.x, the constructs were mainly tied to the UI and API of NSX - it didn’t feel like a fully integrated solution with the VCF platform.

That changes drastically for the better in VCF 9. Here are some of the ways you can now use VPCs across the platform:

User Interface

- NSX UI - Of course you can still use the NSX UI to provision and use VPCs. You may log in as a Tenant or VPC Admin, and you will only have visibility and access to the resources you are entitled to.

- vCenter UI with a default Project (VPC Ready) - Now you can create VPCs, Subnets, and External IPs from vCenter. For more advanced config, use another method.

- VCF Automation Service Catalog item - Using VCFA Blueprints, one could create and attach an application to an existing VPC

- VCF Automation All Apps Organization - I believe this used to be referred to as the Cloud Consumption Interface (CCI). This allows for Kubernetes API access to provision VMs and VKS clusters on top of VPCs, but of course, you can use the UI if you want as well.

- vSphere Supervisor - This is the Local Consumption Interface (LCI) within vCenter, where you can place VMs and VMware Kubernetes Service (VKS) clusters in VPCs. Just as with the CCI, you can also use kubectl to create VMs or containers via the Kubernetes API!

- HCX - Migrate to and from VPCs!

- VCF Operations - Monitor the stats of your workloads in VPC deployments

- VCF Operations for Networks - Monitor network traffic in and out of your VPCs!

API

- The Kubernetes API via the VCF Automation and vSphere Supervisor interfaces. This is truly a game changer for Platform Engineering teams. They can simply attach to Supervisor using kubectl and provision their own apps on demand. This includes VMs and VKS clusters and means provisioning Subnets, DHCP, Security Policy, Load Balancing, and NAT rules all without interfacing directly with vCenter or NSX!

- The NSX, vCenter, VCF Ops, and HCX APIs (basically the API equivalents of the UIs above)

- VCF Automation Terraform Provider - Interface with VCFA via a Terraform provider. Interestingly, it uses the Terraform Provider for Kubernetes under the covers.

- NSX Terraform Provider - Even as a Project or VPC Admin, you can connect to NSX via Terraform and access your resources directly!

- NSX SDK for Java and Python

Conclusion

What else am I missing? How do you plan to use VPCs in VCF? Surely there are other ways we can build on top of this platform. Hit me up on LinkedIn if you’d like to chat about it.

In subsequent posts, I will dig into these new Platform capabilities and see how all of the VCF components come together to create a true Private Cloud experience!